Introduction

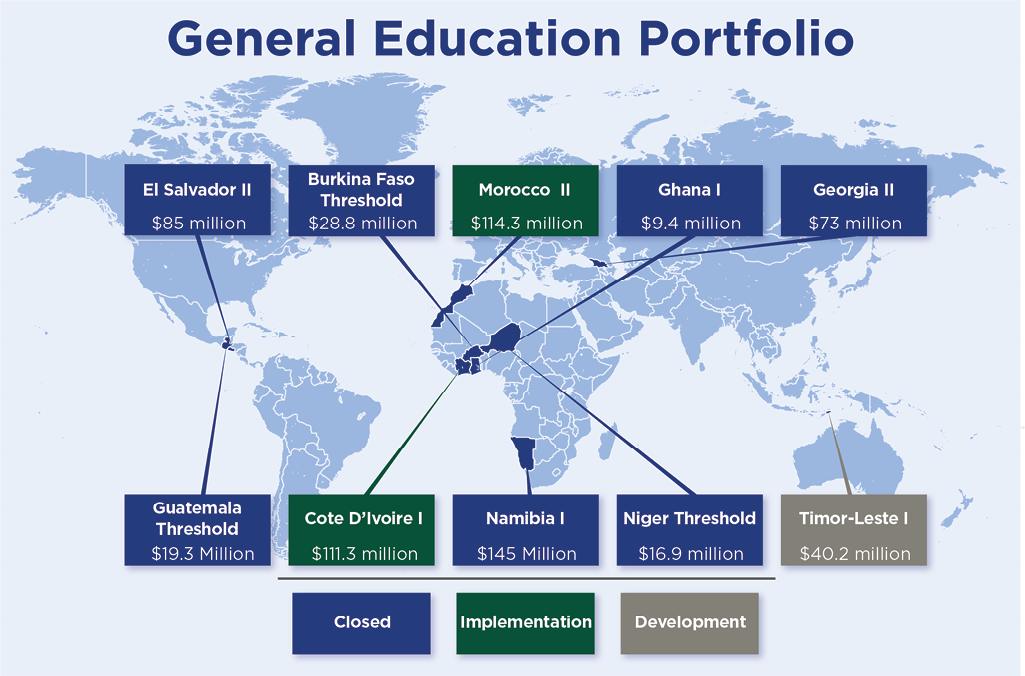

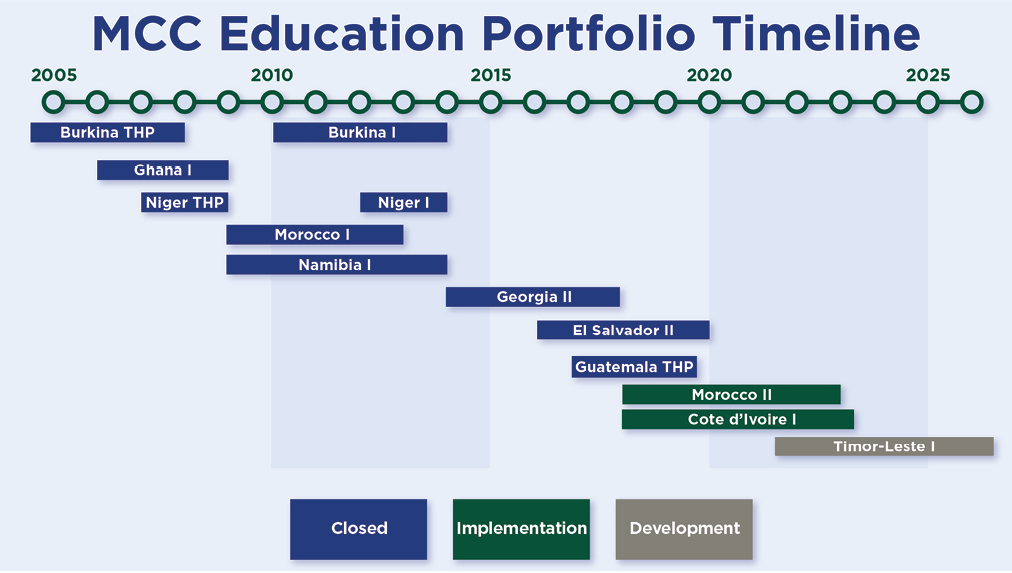

Since 2005, the Millennium Challenge Corporation (MCC) has invested $628 million in Education and Workforce Development interventions at almost every level of education (from primary through tertiary and adult continuing education), in both formal and non-formal settings, and in education that leads to both academic and technical credentials. MCC’s programming has also included both infrastructure and non-infrastructure investments. These investments have spanned 10 countries in Central America, North and West Africa, Asia, and Eastern Europe.

Independent evaluations are integral to MCC’s commitment to accountability, learning, transparency, and evidence-based decision making. The general education evaluation portfolio covers interventions implemented from 2005 to 2022. MCC published its first general education evaluation findings in 2011. This brief, Insights from General Education Evaluations, reviews and synthesizes the findings of MCC’s independent evaluations in general education, covering investments in both primary and secondary education. The evaluation results are supplemented with lessons learned developed by MCC staff. MCC plans to conduct a deeper analysis of lessons learned for this sector, which will be published in a forthcoming Principles into Practice paper. The 2020 Principles into Practice paper, Training Service Delivery for Jobs and Productivity, reviewed MCC’s lessons in technical and vocational education and training.

Key Findings

There are several common findings across the evaluation documents, which are discussed further in the next section. These findings are important for MCC to consider in the design, implementation, and evaluation of future general education investments. The forthcoming Principles into Practice paper will build on these findings and develop next steps for the Agency.

Evaluations show that initial infrastructure investments significantly improve access to schools and learning environments. MCC has also developed more accurate cost and schedule projections for its school infrastructure projects, which has improved project management. However, maintenance practices and funding remain challenges for sustainability.

MCC’s impact on learning in early interventions was driven mainly by attendance and/or enrollment, which is expected where access to schooling was identified as the problem. However, several early programs had a mismatch: their objectives included improving education quality, while their intervention components focused on expanding access.

MCC has been able to use experimental and quasi-experimental methods to evaluate the impacts of a large share of its education investments, providing rigorous evidence in key areas. Nevertheless, because of the breadth and bundled design of many education projects, many of MCC’s impact evaluations estimated the impact of the packaged interventions rather than the differential impact of specific program components. When there is demand to validate specific causal links in the project logic and attribute results to particular interventions, projects should be designed so that those interventions can be evaluated separately.

“Girl-friendly” infrastructure seems to increase attendance for girls, but the evaluations were not able to identify which specific components of intervention packages led to that increase.

Understanding the context within the larger education system is critical to project success. In Georgia, MCC implemented the Teacher Training Activity concurrently with key reforms and the launch of the World Bank-supported professional development scheme. This timing was not planned ex ante, but the reforms and the professional development scheme strengthened MCC’s progress towards its objectives.

Takeaways from MCC’s Independent Evaluations

MCC conducts independent evaluations of all projects, which serve as a significant source of learning for the agency and its stakeholders. Independent evaluations assess whether MCC’s investment was implemented according to plan, whether it produced the intended results, and why or why not. MCC’s evaluations also strive to examine whether the intervention effectively addressed the problem it targeted. The following section summarizes the key findings of the five general education evaluations MCC has published, along with a brief description of the program interventions and objective(s) evaluated.

The Agency’s independent evaluation findings highlight successes as well as areas for improvement in project design and implementation and in how the agency evaluates its projects. The findings show that MCC has been successful in reducing barriers to school enrollment, particularly for female students, and in improving school infrastructure. The evaluation findings also demonstrate that MCC’s early general education projects, like those in other sectors, lacked clearly defined program logics and analytical frameworks that mapped well-defined problems to proposed interventions. Many of the early projects for which MCC has evaluation results tended to focus primarily on school infrastructure and textbooks rather than on issues such as the governance and policy reforms necessary to improve the learning curve. In addition, MCC invested in wide-ranging programs that tried to tackle numerous problems within a partner country’s education system, which spread limited resources over too many activities and made them difficult to implement.

This review of MCC’s past evaluations also revealed that the Agency was often unable to assess the impact of individual components of its education projects, such as teacher training as compared to improved infrastructure. MCC’s evaluators also found that projects often lacked sufficient documentation of their objectives and logic models. In addition, it was sometimes difficult to find documentation of early Threshold programs that were implemented by other donors. Further, MCC has faced challenges in identifying and measuring outcome indicators that matched the project outputs. For example, when MCC’s stated objective, as defined by MCC and country teams, was to improve access to schooling, it would have been more appropriate to select indicators related to enrollment and attendance rather than learning.

Burkina Faso Threshold BRIGHT & Compact BRIGHT II (2005-2012)

The Burkinabe Response to Improve Girls’ Chances to Succeed (BRIGHT) Project was designed to reduce barriers to student enrollment, particularly girls’ enrollment, by expanding the number of classrooms constructed with durable materials and adding girl-friendly infrastructure, such as separate latrines for girls and boys.

The first phase of the project (BRIGHT I) operated from 2005 to 2008 under the Burkina Faso Threshold Program. It consisted of constructing primary schools with three classrooms (for grades 1 through 3) and offering daily meals, take-home rations for girls with attendance rates above 90 percent, textbooks, a mobilization campaign to support girls’ education, adult literacy programs, and capacity building for local school officials. MCC and the government of Burkina Faso decided to extend the BRIGHT program from 2009 to 2012 and devoted $28.8 million from the Burkina Faso Compact to the project. The second phase of the program, BRIGHT II, included the construction of three additional classrooms for grades 4 through 6 in the original 132 villages and continued the complementary interventions begun during the first three years of the program.

The final evaluation used a Regression Discontinuity Design (RDD) to compare children in the 132 villages selected for BRIGHT (participant group) with children in 155 villages that applied to participate in BRIGHT but were not eligible (comparison group). Final data collection occurred ten years after the start of the program. The RDD allowed the evaluator to determine whether observed changes were caused by MCC’s interventions rather than by other factors. The causal analysis took advantage of the fact that the Burkina Faso Ministry of Education gave every village that applied to the program an eligibility score based on its potential to improve girls’ educational outcomes. The evaluation compared villages that scored just high enough to receive the program with those that scored just below the threshold. The analysis assumed that both observable and unobservable differences between villages just around the cutoff would be minimal.
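To make the logic of the regression discontinuity comparison concrete, the following is a minimal Python sketch of a sharp RDD estimate: fit a separate line on each side of an eligibility cutoff, using only villages within a chosen bandwidth, and read off the jump in the predicted outcome at the cutoff. The cutoff, bandwidth, and village data below are hypothetical and synthetic (they are not BRIGHT data), and the sketch illustrates the general technique rather than the evaluator’s actual specification, which would also involve bandwidth selection and standard errors.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Village:
    score: float       # eligibility score assigned before the program (running variable)
    enrollment: float  # outcome, e.g. share of school-age children enrolled


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares fit of y = a + b * x; returns (intercept a, slope b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def rdd_estimate(villages: List[Village], cutoff: float, bandwidth: float) -> float:
    """Sharp RDD: fit a line on each side of the cutoff, using only villages within
    `bandwidth` of it, and return the jump in predicted outcome at the cutoff."""
    below = [v for v in villages if cutoff - bandwidth <= v.score < cutoff]
    above = [v for v in villages if cutoff <= v.score <= cutoff + bandwidth]
    # Centering the score at the cutoff makes each intercept the predicted outcome there.
    a_below, _ = fit_line([v.score - cutoff for v in below], [v.enrollment for v in below])
    a_above, _ = fit_line([v.score - cutoff for v in above], [v.enrollment for v in above])
    return a_above - a_below


if __name__ == "__main__":
    # Synthetic data: enrollment rises gently with the score, plus a
    # 10-point jump for villages at or above a hypothetical cutoff of 50.
    cutoff = 50.0
    villages = [
        Village(float(s), 0.30 + 0.005 * (s - cutoff) + (0.10 if s >= cutoff else 0.0))
        for s in range(30, 71)
    ]
    print(round(rdd_estimate(villages, cutoff, bandwidth=10.0), 3))  # prints 0.1
```

Restricting the fit to a narrow bandwidth reflects the assumption stated above: villages just on either side of the cutoff are taken to be similar in every respect except program eligibility, so the jump at the cutoff can be attributed to the program.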

Ten years after the program began, self-reported data showed that both girls and boys in the treatment villages were 6 percentage points more likely to be enrolled in school than those in the comparison villages, compared with an average of 31.9 percent in the comparison villages. Higher enrollment drove higher levels of learning, but learning progressed at the same rate as in other schools: the learning curve in communities that received BRIGHT schools was comparable to that in similar villages that already had schools. To improve the rate of learning, interventions targeted at improving the quality of education would have been necessary.

Lessons from the BRIGHT evaluations show that early consideration should be given to the sustainability of any activities that will require long-term institutional support. Although the package of complementary interventions was impactful, the Government did not replicate or scale it. MCC also learned that interventions targeting girls can help reduce the likelihood of early marriage and improve educational outcomes. The evaluation showed a 6.3 percentage point decrease in the marriage rate of female respondents ages 13-22: 39 percent of female respondents in the Comparison group reported that they were currently married, compared with 32.7 percent in the Treatment group. This decrease was statistically significant and suggests that increased female enrollment in schools drove the reduction in early marriage.

Finally, because all treatment communities received the full package of BRIGHT and BRIGHT II interventions, it was not possible to estimate the impacts of individual project components. Given that the government did not replicate or scale the program, most likely because of financial considerations, identifying the most efficient (lowest-cost, highest-impact) interventions could have provided valuable information for future programming.

Ghana I Community Services Education Sub-Activity (2007-2012)

The $9.4 million Education sub-Activity, which included the rehabilitation or construction of 221 schools in Ghana’s Afram, Northern, and Southern zones, aimed to improve school access by investing in education infrastructure. The sub-Activity took place under the broader Community Services Activity, which was designed to complement agriculture investments with education, water and sanitation, and rural electrification. The underlying logic of the sub-Activity was that better access to schools would lead to improvements in enrollment, attendance, and school completion.

The ex-post evaluation combined qualitative case studies with a school conditions survey implemented 7-9 years after construction. The evaluation found that MCC-funded schools were in better condition than other schools four years after the completion of the project. However, conditions also varied among MCC-funded schools across the three zones: MCC schools in the relatively affluent Southern and Afram zones were in better condition than MCC schools in the Northern zone, where poverty rates are higher. In addition, despite the initial improvements in the physical infrastructure of MCC schools, there were sustainability risks due to a lack of funding and community buy-in for infrastructure maintenance. Key informants reported both difficulties in applying to the central government for maintenance funds and that local leaders and the community as a whole did not act when school facilities needed repairs.

Respondents in the follow-up survey from both MCC and non-MCC schools across all zones felt that the investments in school infrastructure helped enrollment, attendance, completion, and learning. However, the survey responses reflect community perceptions of schooling, not the results of student learning assessments.

Finally, there was no documented problem statement or clear program logic for the Ghana Education sub-Activity. Because the problem and objectives were not defined, and because the ex-post evaluation was conducted years after implementation, it was difficult to evaluate the long-term outcomes theoretically associated with the intervention. In recent years, MCC has improved its problem definition and the alignment between project implementation timelines and independent evaluation timelines, which addresses some of the measurement issues encountered in the Ghana I sub-Activity.

Niger Threshold IMAGINE and NECS Project (2008-2013)

The main intervention of the $12 million Improve the Education of Girls in Niger (IMAGINE) Project was the construction of girl-friendly primary schools in villages where classes were held in the open or in thatched-roof buildings. The school construction was intended to increase girls’ enrollment and attendance and, in turn, improve learning outcomes. Complementary activities to improve girls’ primary education were planned; however, most of those activities were canceled after MCC’s Board of Directors suspended the Threshold program in 2009 due to political events that were inconsistent with the criteria used to determine a country’s eligibility for MCC assistance. In June 2011, MCC approved the reinstatement of threshold program assistance to Niger, which included $2 million for the Niger Education and Community Strengthening (NECS) project to (i) increase access to quality education through a variety of investments, including borehole construction and maintenance, community engagement and mentoring programs, and promotion of gender-equitable classrooms and student leadership activities; and (ii) increase student reading achievement by implementing an ambitious early grade reading curriculum.

The evaluation of IMAGINE used random assignment at the village level, comparing villages that received the IMAGINE program with those that did not, and collected final data in 2013, three years after school construction was completed. The subsequent evaluation of NECS maintained the IMAGINE random assignment and randomly selected additional schools from the Control group to receive the complementary activities without infrastructure. Thus, the final evaluation compared 60 villages that received both IMAGINE and NECS interventions, 82 villages that received only NECS, and 50 Control villages. Final data collection took place in 2015-2016.

The IMAGINE Project had a large and significant impact on girls’ enrollment, attendance, and test scores. In 2013, girls in the Treatment group were 11.8 percentage points more likely to be enrolled in school than those in the Control group and 10.5 percentage points less likely to have missed more than two consecutive weeks of school during the last year. Girls in the Treatment group also scored 0.18 standard deviations higher on the math assessments than those in the Control group, a relatively large difference for learning assessments. Overall, children in treatment villages reported 2.72 fewer days of absence in the month prior to the survey than those in the Control villages. Thus, the IMAGINE evaluation findings demonstrate that the girl-friendly infrastructure had an initial positive effect on girls’ schooling.

The final evaluation in 2016 compared children in villages that received both IMAGINE and NECS, only NECS, and the control group. Compared with children in the control villages, both boys and girls in NECS & IMAGINE villages and in NECS-only villages were more likely to report attending school on the most recent day the school was open. Fifty-two percent of children in the control villages reported attending school on the most recent day; this figure was 13.6 percentage points higher for children in NECS & IMAGINE villages and 11.1 percentage points higher for children in NECS-only villages. The NECS evaluation did not find differential impacts on girls and boys in either IMAGINE & NECS or NECS-only villages for enrollment or learning outcomes. Again, because both evaluations assessed the impacts of packages of interventions, further evaluation would be necessary to understand which components of the project were the key drivers of impacts for girls and how those impacts could be better sustained.

Namibia Quality of General Education Activity (2009-2014)

The Quality of General Education Activity (Education Activity) and the Improving Access to and Management of Textbooks Activity (Textbook Activity) aimed to improve the quality of the Namibian workforce by enhancing the equity and effectiveness of general education. The Education Activity rehabilitated nearly 50 primary and secondary schools and funded training for school personnel through a cascade-style training-of-trainers approach. The Textbook Activity distributed textbooks to all Namibian schools and supported textbook procurement and management reforms. Both activities were implemented under the $114.3 million Education Project, which also included the Expanding Vocational and Skills Training Activity.

The performance evaluation was based on the review of written reports, key informant interviews, and focus groups as well as descriptive quantitative analysis of project components and student learning outcomes. The evaluation found that test scores improved in lower grades and the improvement was greater than the positive trend in national scores. However, the evaluation found no overall improvement in scores for grades 10-12. Exam scores analyzed by sex showed that girls consistently outperformed boys in English in all grades. However, while girls outperformed boys at younger ages in math, by 10th and 12th grade boys performed better than girls on the learning assessments for both math and science. Given the inconsistent pattern of results in learning assessments and the lack of a true counterfactual, it is difficult to attribute any observed improvements in learning to MCC’s investments. In addition, although the improved school facilities met the standard national building designs, the infrastructure did not match the educational needs for different levels of schools and curricula. MCC could have done further assessments to better adapt the national designs to school needs. There were also concerns about the sustainability of the new textbook distribution as well as the improved teacher-student ratio.

Based on the evaluation findings, the training-of-trainers approach did not produce sustainable results. If MCC chooses to implement a training-of-trainers scheme in the future, the activity should begin early enough in the implementation period that enough trainers are trained, and the training is brought to scale, by the end of the compact, increasing the likelihood of success and sustainability. In addition, unreliable financing of school operations and maintenance presented sustainability risks to the textbook, infrastructure, and training interventions.

Georgia II General Education Project (2014-2019)

The $73 million Improving General Education Quality Project (Education Project) aimed to improve the quality of public science, technology, engineering, and mathematics (STEM) education in grades 7-12. The Education Project invested in rehabilitating education infrastructure, with a focus on improving lighting and heating, and in constructing new science laboratories in 91 schools. In addition, a one-year sequence of training activities was provided nationwide to Georgian secondary school educators, in two cohorts, and to school directors. The project’s evaluation used two methodologies: a randomized controlled trial to estimate the impacts of the Improved Learning Environment Infrastructure Activity (School Rehabilitation Activity), and propensity-score matching with trend analysis to estimate the impacts of the Training Teachers for Excellence Activity (Teacher Training Activity). Interim results from 2019 are available for both activities.

As part of the School Rehabilitation Activity evaluation, teacher, student, and parent surveys were conducted in 2015 (baseline), 2016-2019 (first follow-up), and 2019-2022 (endline). The interim evaluation found that at the time of the first follow-up survey (2-6 months after construction was completed), the MCC investment had substantially improved school infrastructure in the treatment group compared with the control group. In addition, students and teachers agreed in their survey responses that the improvements addressed barriers to using classroom time effectively. A key lesson from the School Rehabilitation Activity is that construction took significantly longer than originally anticipated. Work plans with realistic timelines are critical to the success of future school infrastructure projects.

The evaluation of the Teacher Training Activity found that, one month after the training, many teachers reported higher confidence in their understanding of student-centered teaching practices and of how to create an equitable learning environment for girls, but most teachers reported that they had not yet begun to use those new practices in the classroom. For example, only 10 percent of teachers reported developing lesson plans that include differentiated instruction for students performing at different levels, and only 20 percent reported adapting their instruction based on students’ responses to informal classroom assessments. At the time of the one-year survey in 2018 and the two-year follow-up survey in 2019, teacher responses still showed mixed results, though there were small positive trends in the take-up of the new pedagogy.

Between the one-year and two-year surveys, the Ministry implemented a policy to incentivize early retirement, which provided a natural experiment suggesting that the training was more effective for younger teachers. Teachers who were older and lower-ranked in the professional development scheme largely took the retirement incentives, leaving a younger, more certified, and more motivated teacher workforce. Two years after the training sequence, the younger, more certified teachers were more likely to report using the new student-centered practices and had higher confidence in science-related teaching practices.

There were two evaluation-related lessons from the Georgia General Education Project. First, the evaluations indicated that stakeholder input is critical to survey module design: it made the Teacher Training Activity data more useful to both MCC and the Ministry of Education, Science, Culture and Sport. Because the Ministry was closely involved in designing the survey questions, the survey was able to measure improvements in the specific teaching practices on which the training had focused. Future evaluations should plan time for an in-country survey design workshop. Second, the School Rehabilitation evaluation’s use of pairwise randomization implemented by construction phase helped mitigate risks to the evaluation arising from uncertainty about the number of treatment schools and construction timelines. This design preserved the evaluation’s ability to remove schools from the sampling frame if the Ministry decided a school was no longer eligible, or depending on the budget for each phase of construction.
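The idea behind pairwise randomization by construction phase can be illustrated with a minimal Python sketch. It rests on stated assumptions: the school IDs are hypothetical, pairs are formed from consecutive schools in a list assumed to be pre-sorted by some matching characteristic, and the function names are illustrative rather than the evaluator’s actual procedure.

```python
import random
from typing import Dict, List, Tuple

# (construction phase, treated school, control school)
Pair = Tuple[str, str, str]


def pairwise_randomize(schools_by_phase: Dict[str, List[str]], seed: int = 0) -> List[Pair]:
    """Within each construction phase, pair consecutive schools and randomly
    assign one school in each pair to treatment and the other to control.

    Assumes schools are pre-sorted so that neighbours are similar (e.g. by
    enrollment); an odd school left over in a phase is not assigned."""
    rng = random.Random(seed)
    pairs: List[Pair] = []
    for phase, schools in schools_by_phase.items():
        for i in range(0, len(schools) - 1, 2):
            first, second = schools[i], schools[i + 1]
            if rng.random() < 0.5:  # coin flip decides which school is treated
                first, second = second, first
            pairs.append((phase, first, second))
    return pairs


def drop_school(pairs: List[Pair], school: str) -> List[Pair]:
    """Remove an ineligible school together with its partner, so every remaining
    pair still contrasts one treated and one control school."""
    return [p for p in pairs if school not in (p[1], p[2])]


if __name__ == "__main__":
    # Hypothetical school IDs grouped by construction phase.
    schools = {"phase 1": ["S1", "S2", "S3", "S4"], "phase 2": ["S5", "S6", "S7"]}
    pairs = pairwise_randomize(schools, seed=42)
    print(len(pairs))                      # 3 pairs; S7 is left unassigned
    print(len(drop_school(pairs, "S2")))  # 2 pairs remain after dropping S2's pair
```

Because pairs never span construction phases, dropping a single school (with its partner) or an entire phase, for example if that phase’s budget is cut, still leaves a set of balanced treatment-control comparisons.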

Lessons from Investments Pending Independent Evaluation Results

MCC’s second compact with El Salvador and the Threshold Program with Guatemala closed relatively recently. As such, final evaluation results are not yet available. However, MCC has valuable lessons from implementation of those programs and some early findings that contribute to the body of knowledge related to general education interventions.

El Salvador Investment Compact Education Quality Activity (2015-2020)

The $85 million Education Quality Activity included two sub-activities intended to improve the quality of the national education system: the Strengthening of the National Education System Sub-Activity, which was designed to improve the effectiveness and quality of the national education system, and the Full-Time Inclusive Model Sub-Activity, which comprised a suite of interventions, including extending the school day, teacher professional development trainings, improved data management systems and use of national learning assessment data, training for 300 specialists and 1,400 teachers and principals in gender-inclusive teaching practices, technical assistance to education units in 14 departments in the Coastal Region of El Salvador, and new and improved school infrastructure for 45 school systems. The independent evaluation uses random assignment of the integrated school systems, combined with a mixed-methods performance evaluation that assesses the implementation of the activity.

Final independent evaluation results for the Education Quality Activity are expected in 2023. In addition, FOMILENIO II, the local Salvadoran implementing entity, conducted a self-assessment during Compact closeout in 2020 that produced valuable lessons from implementation. FOMILENIO II found that in many ways the initial project design had underestimated the budget and personnel needed to complete the Activity. FOMILENIO II also felt that the extended school day would have been easier to implement if it had started on a smaller scale and then been scaled up. Finally, the self-assessment found that coordination between different departments within the Ministry of Education was a challenge to implementation; a more robust inter-departmental coordination effort might have helped the activity achieve a larger impact.

Guatemala Quality of Education Activity and Strengthening Institutional Capacity & Planning Activity (2016-2021)

The Quality of Education in Support of Student Success Activity (Quality of Education Activity) was one of three activities under the $19.3 million Guatemala Education Project. The activity aimed to implement an intensive 20-month professional development program for 2,400 teachers and school directors, train pedagogic advisors and 40 management advisors to support schools, form 100 school networks that linked primary schools to lower-secondary schools, and establish 400 parent councils at the lower-secondary level. The Strengthening Institutional Capacity & Planning Activity (Institutional Capacity Activity) aimed to improve the Ministry of Education’s planning and budgeting functions so that it could provide more equitable and higher-quality secondary education. The independent evaluation uses random assignment at the school district level to estimate the impacts of the Quality of Education Activity. The evaluation also includes a qualitative study that examines progress in implementing the Institutional Capacity Activity.

Qualitative data collected between March 2020 and June 2021, at the end of the teacher professional development program, showed that the Quality of Education Activity was implemented according to plan and that teachers and school directors felt the program was important and, as such, participated in high numbers. In addition, teachers felt that the new teaching practices were more effective than their former pedagogical practices. In focus groups, teachers said they believed they saw improvements in students’ classroom performance and were motivated to continue improving their teaching. This is a preliminary finding that will be further assessed during the endline survey.

Early findings from the Institutional Capacity Activity evaluation show that the Ministry of Education supported reforms related to teacher selection and recruitment. However, because the reforms are based on a ministerial decree, they are susceptible to change through waivers and exceptions, which poses challenges to sustaining them. In addition, the lack of a high-level champion to advocate for the reforms is a key challenge to long-term success.

Conclusion and Next Steps

MCC has been successful at improving school infrastructure and expanding access to education, although infrastructure maintenance and sustainability remain challenges. MCC has also had some success in promoting girls’ education and continues to emphasize integrating gender into education programs. In addition, MCC’s education evaluations have been able to demonstrate whether a project was successful in improving outcomes and have produced valuable findings. However, because many projects and activities implemented packages of bundled interventions, MCC’s evaluations have not been able to show which aspects of the projects, or which combinations of interventions, worked.

The key findings have implications for the future of general education investments at MCC. The evaluation findings show a need to further refine the agency’s tools for problem diagnosis to learn about costs, timing, and appropriate alignment of incentives for different actors in the school system. The larger Principles into Practice paper will outline next steps in updating MCC’s practice based on these findings. The paper will address how the Agency can improve its problem analysis, design and implementation, and evaluations of general education projects. It will also explore specific lessons learned in delivering educational infrastructure as well as in effective gender and social inclusion.